Mind the Gap on Privacy and... unbundling Google Analytics, Beyoncé and the Data Mesh.

👋 Hello!

When we started Mind the Gap, our promise was to deliver a few items to wonder and ponder about privacy and (data) engineering and how to bring them closer together.

Today's episode does just that, but with a bit more background than usual as some Big Questions are shaping the practical privacy discussions of today:

- With privacy authorities still hammering Google Analytics, we thought to offer some guidance on how to think about the layers you will have to replace if you want to de-risk and move away from it.

- As a lighter intermezzo, Beyoncé and Jay-Z have a thing or 7 to say about privacy as a coordination problem.

- Moving away from GA and solving coordination issues between privacy and engineering are probably part of a wider privacy programme. Data Mesh is a new paradigm for organising the data function, and we've been thinking about privacy's place in it.

Let me know what you think, spread the word to aspiring subscribers and, if we can help... you know how to identify and locate us 😉.

Ciao!

-Pim

The Google Analytics saga: consider unbundling it to take control.

Another week, another flurry of "Don't use Google Analytics" from privacy authorities. We're counting the Dutch, French, Italian and Austrian DPAs among the ones that have now taken a position against its usage.

But Google Analytics is just as widely used as it was a week ago. And although the authorities have given some very concrete answers to proposed solutions, there is still no practical guidance on how to deal with an illegal Google Analytics. Here in NL, the association of e-commerce retailers is even chasing the Ministry of Justice for guidance "or a whole sector will violate the law".

So we have an entire market (vendors and organisations alike) chasing the silver bullet that will give Google Analytics the checkmark again ✅.

Waiting for the silver bullet to hit may turn out to be expensive time lost. The reason: Analytics as a product is a bundle of value functions. Privacy, and so the control point you should look at, sits in the base layer that the other value functions build upon. And that is exactly the layer you shouldn't expect Google to give up.

We get a lot of questions about it ("you guys do privacy and data, right?"). And while STRM is closely related to the data dimension, we do something more specific than the package a tool like Analytics offers.

All-in, this might be an important opportunity to take control for reasons beyond privacy pressure and reduce strategic lock-in. That does require a change of perspective.

Lend a hand

The issue with external pressures like these is that the messaging often focuses only on what you can't do. We have been vocal about offering concrete guidance to the market instead of just a bunch of njets, and feel that's missing from this conversation too.

We wanted to offer some additional understanding and guidance (albeit high-level) by examining whether there's a setup that allows you to drive more value with lower dependence and better compliance.

The good news: there is. The bad news is it comes with a lot of work.

So first, the question of why the entire world is on Google Analytics anyway.

Read on in the blog:

Building privacy programmes according to BEYONCÉ

Privacy as a subject is quite heavy on the stomach: see the Google Analytics issue above, or a seemingly simple question like "what is identification?". But as privacy might slowly become a shareholder demand, any digestible primer on how to design privacy programmes if you're not a privacy engineer is welcome.

So, an example where Privacy meets Pop Culture is definitely worth sharing.

While looking for the video of a panel discussion at the 2022 RSA Conference, I came across a talk by privacy engineer Ayana Miller (then at Pinterest). With some LAF (Liberal Acronym Fabrication) she outlines 7 commandments to bring Privacy by Design into your organisation, processes and systems.

On a fundamental level, Ayana paints a picture of "privacy" as a coordination problem for (large) organisations. All-in, it's an interesting talk if you're designing privacy programmes, and it aligns with many of the assumptions STRM is turning into a product. It is also a much lighter snack on privacy processes than your average law text. I found the first part most interesting, as it's condensed and elegantly highlights the challenges through that cheeky acronym.

The video (slides + audio) is below. If you lack the time to listen to it in full, these are my main takeaways:

- Jot down: link policy to purpose of collection, and make sure data is traceable.

- Always be explicit: what is the policy, what is the purpose of data?

- Y'all have to stay the course. Changes (process- or system-level) can render data unusable or non-compliant, or make the privacy function wobbly and untrusted.

- Zoom in early on the objectives teams want to achieve with data and work from there; don't just assess the impact on release. Additional take from Pim: make sure your DPIAs focus on application areas instead of just applications or use cases.

So what if you could decouple privacy from engineering and remove the coordination between them by reflecting the paper reality in the data itself? What if you could make sure data cannot be used beyond its purpose instead of reviewing every single feature? Spoiler: that's what we do. 😇
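To make the idea of "purpose in the data itself" a bit more tangible, here is a minimal Python sketch. It is an illustration only, not how STRM or any specific product works: the `FIELD_PURPOSES` policy, the field names and the `view_for_purpose` helper are all made up for this example. The point is that a consumer declares a purpose once and only ever receives the fields the policy allows for it.

```python
# Hypothetical policy: for each field, the purposes it may be used for.
# Field names and purposes are illustrative assumptions, not a real schema.
FIELD_PURPOSES = {
    "user_id": {"analytics", "marketing"},
    "email": {"marketing"},
    "page_url": {"analytics"},
    "ip_address": set(),  # collected, but no purpose permits downstream use
}


def view_for_purpose(record: dict, purpose: str) -> dict:
    """Return only the fields whose policy allows the given purpose.

    Fields that are missing from the policy are dropped (default deny).
    """
    return {
        name: value
        for name, value in record.items()
        if purpose in FIELD_PURPOSES.get(name, set())
    }


event = {
    "user_id": "u-123",
    "email": "someone@example.com",
    "page_url": "/pricing",
    "ip_address": "203.0.113.7",
}

analytics_view = view_for_purpose(event, "analytics")
marketing_view = view_for_purpose(event, "marketing")
```

In this sketch the analytics consumer never sees the e-mail address or the IP address, so there is nothing to review per feature: the policy travels with the data rather than living in a document.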

The Cost of Coordination: Privacy's place in the Data Mesh

The topic of removing the (cost of) coordination through decoupling is also at the core of Data Mesh, an up-and-coming perspective on organising the data function. And if there's data, there's privacy.

Traditionally, data is "just there" for many users and consumers in an organisation, with the data team as the mining function and product teams using that ore to press the bodywork for the cars they sell to consumers.

Industrial data production lines

But as organisations start to harvest more value from data, intermediate steps that were previously part of the product development cycle become products in themselves: there are now teams that turn the ore into beams of different shapes and sizes before handing them over to the production line for the chassis. Instead of producing made-to-measure inputs, these teams are delivering "standard" beams that have many, sometimes even unknown, potential applications in downstream production. Think of your data architecture and organisation as a micro-level economy.

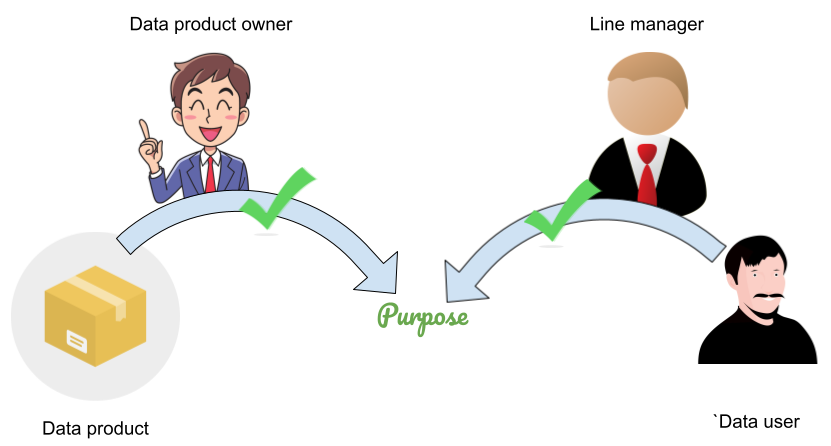

This is the data mesh on a conceptual level: a network of data products that together create "living" data value chains and decouple the acquisition and refinement of data from the potential value application.

Enter privacy

There is just one important difference between the industrial analogy and data in real life: if in any way related to people, data is personal.

And so privacy adds a whole new dimension to the problem data mesh is trying to solve: instead of just dumping everything into a data lake to enable data value creation, the personal dimension and its implications for downstream usage should be kept, traced and decoupled from potential data product usage. It is the opposite of thinking about personal data as a diluted drop of ink in a (data) lake.
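One way to picture "kept and traced" is a data product that carries its personal fields as metadata and passes them on to whatever is derived from it. The sketch below is a hedged illustration under our own assumptions: `DataProduct`, `derive` and the example fields are hypothetical and not part of any data mesh standard or tool. It also simplifies heavily, since in practice aggregated or derived data can still be personal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataProduct:
    """A hypothetical data product that knows which of its fields are personal."""
    name: str
    personal_fields: frozenset
    upstream: tuple = ()  # names of the products this one was derived from


def derive(name: str, sources: list, fields_used: set) -> DataProduct:
    """Create a downstream product.

    A personal field stays flagged downstream if the new product actually
    uses it; fields that are not carried over drop out of the trace.
    """
    inherited = frozenset(
        f for source in sources for f in source.personal_fields if f in fields_used
    )
    return DataProduct(name, inherited, tuple(s.name for s in sources))


clickstream = DataProduct("clickstream", frozenset({"user_id", "ip_address"}))

# Sessions keep user_id, so the personal flag travels along with it.
sessions = derive("sessions", [clickstream], {"user_id", "page_url"})

# Page counts only use page_url, so no personal field is inherited.
page_counts = derive("page_counts", [sessions], {"page_url"})
```

Here `sessions` still knows it contains `user_id` and where it came from, while `page_counts` carries no personal fields. That is the ink staying visible in the lake instead of dissolving.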

While pondering the question of the position and role of privacy in data mesh architectures, we came across an article by Wannes Rosiers on the exact topic of Privacy inside a Data Mesh.

Head over to Medium for some insight into potential roadblocks and solutions to deal with privacy inside a data mesh architecture:

Channel some insight to the market: Guernsey DPA sets an example

As you will recall by now, we started STRM on the observation that there is a (large) gap between the paper realities of privacy and privacy regulations and the deep engineering and system challenges they pose. And we've been vocal about how privacy authorities can better advance their own goals by providing concrete guidance on how to comply.

So I was happy to see the island of Guernsey propose a checklist for local businesses on the topic of cybersecurity. Now, Guernsey is not the world's leading island by population, but it's always easier to talk about something in the light of an example. And now we have one:

That's it!

And... that's it for this week! Did I mention we're working to launch as the newest addition to AWS Marketplace? I'll make sure to let you know when we're there!